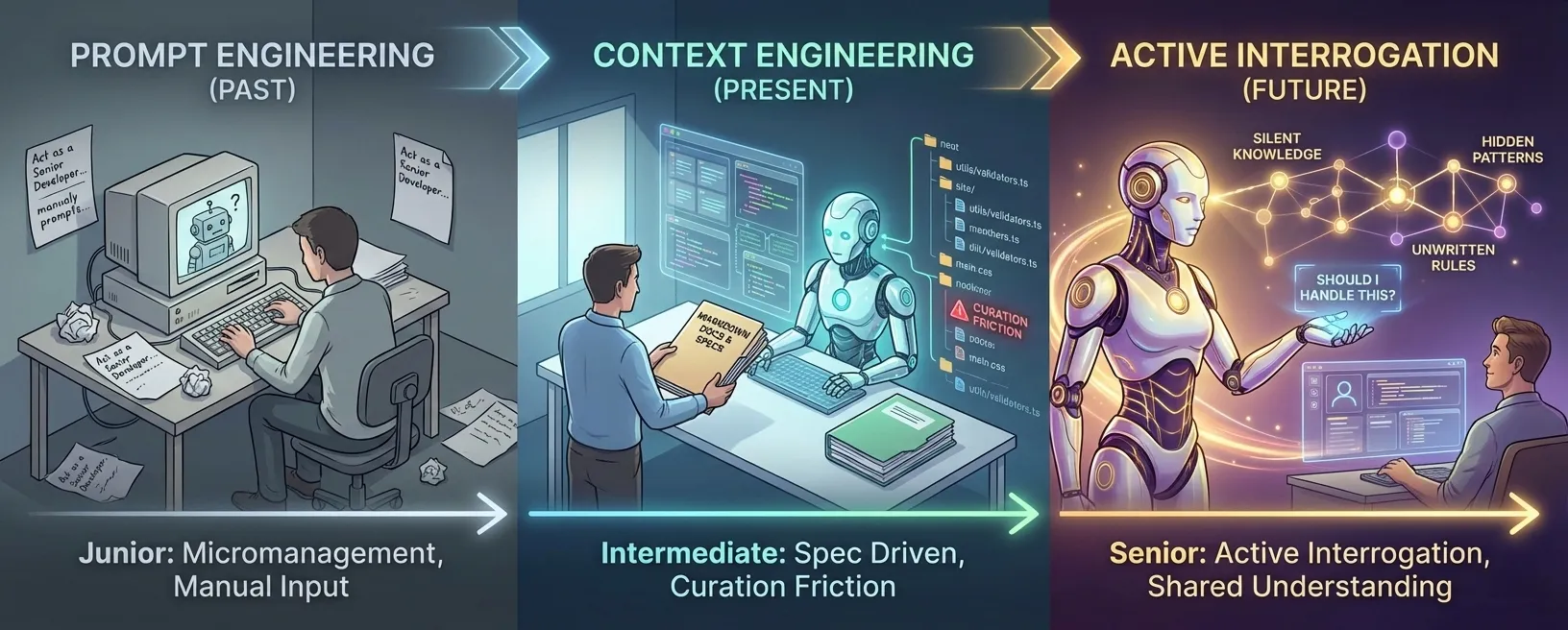

Senior AI Agents: True Intelligence is Instructions Discovery

We spent years mastering prompts, then we mastered context. But a true Senior Developer doesn't wait for the perfect context. They discover the hidden instructions you forgot to give.

The Era of Prompt Engineering

Two years ago, we were stuck in the era of Prompt Engineering. We believed the magic spell was in the phrasing: “Act as a Senior Developer…”

This was pure micromanagement. We weren’t delegating. We were puppeteering the model, trying to coax the right output through trial and error. The human was the engine, and the AI was just the interface.

The Era of Context Engineering

Today, we have moved past that. We are now in the era of Context Engineering.

We don’t just ask; we prepare. We use robust specifications, Markdown documentation, and custom skills. We treat the AI like a talented contractor. We can delegate tasks effectively, provided we hand over a perfectly organized dossier first.

This approach works incredibly well. It allows for “Spec Driven Development” where we define the what and the AI handles the how.

But there is still a friction point: Curation. The AI is a genius, but it is often an isolated genius. It has tools to find files, but if the project uses unconventional naming, or if the instructions are slightly off, it gets lost. Worse, it often generates “alien code”, logic that works perfectly but looks nothing like the rest of your codebase because it wasn’t explicitly shown the local patterns. The human is no longer a micromanager, but they must still be a perfect Librarian.

The Three Stages of Evolution

To understand where we are going, we need to map the evolution of “Agentic” capability not by how much code they write, but by how much context they can find on their own.

1. The Copy-Paste Junior

- Input: “Write a function to validate emails.”

- Behavior: It outputs a perfect regex function.

- The Trap: It didn’t know you already had a

utils/validators.tsfile. You have to manually copy-paste the code, import it, and wire it up. If you don’t provide the exact file context, the agent is effectively blind to your repository.

2. The Isolated Specialist (Intermediate)

- Input: “Change the primary button color to blue.”

- Behavior: It searches the file tree, finds

main.css, and adds a custom.button-blueclass. The task is marked as complete. - The Trap: It missed that the project uses Tailwind. The code works visually, but it violates the project’s architectural patterns. It created “alien code” because you forgot to explicitly prompt “Use Tailwind”. The agent found the file, but it missed the pattern.

3. The Context Hunter (Senior)

- Input: “Add a new leaderboard feature.”

- Behavior:

- Interrogates: “How do we currently handle high-traffic writes?”

- Discovers: “I see we don’t write game events directly to Postgres. We use a Redis write-behind pattern to protect the DB.”

- Identifies: “I also noticed we denormalize user scores into a JSONB column on the

Usertable for performance.”

- The Result: Instead of creating a simple (and slow) SQL table, it proposes a Redis Sorted Set implementation that syncs asynchronously to the DB, perfectly matching the unspoken “hardcore performance” culture of the team.

It’s Not About the Context Window

There is a belief that bigger context windows (1M, 10M tokens) will solve the Curation problem. “If I can just feed the entire codebase into the prompt, the AI will know everything.”

This is the equivalent of handing a new employee a 5,000-page manual on their first day and expecting them to be productive.

Seniority isn’t about knowing everything (Memory). It’s about knowing what to ask (Discovery). A Senior Agent doesn’t read 10,000 files. It reads the right 5 files. It runs the test suite to see what breaks. It checks the linter config to see what is forbidden. It performs Active Interrogation.

The Final Frontier: Silent Knowledge

The hardest instructions to follow are the ones that are never written down.

- “We don’t use ORMs in the payment service because of latency concerns.”

- “We never delete data, we only soft-delete.”

- “That variable is named

tempbecause it was supposed to be temporary in 2019.”

A Junior Agent sees deleteUser() and uses it.

A Senior Agent sees deleteUser(), notices it hasn’t been called in 2 years, checks the database schema, sees a deleted_at column, and pauses.

“Are you sure you want to use this function? It looks like dead code. The pattern here seems to be soft-deletion.”

Conclusion

The future of AI Agents isn’t about them understanding our instructions better. It’s about them understanding us better. We don’t need agents that blindly execute our commands. We need agents that are smart enough to look around, realize we forgot something, and say:

“I think you missed this. Should I handle it?”